Table of Contents

Optimizing a Pipelined CPU

Brendan Caporaletti, Chelsea Bailey, Jeffrey Hanford

Code: https://www.dropbox.com/s/2ulskrgce5xltpq/CPU_ResourceFiles.zip

The Vision

We made an optimized, 32-bit Pipelined CPU with features including: hazard avoidance, branch prediction, and MIPS compliance.

We began by creating a working 32-bit CPU with static branch prediction and data forwarding that was not quite compliant with MIPS architecture. The goal of this project was to use that CPU as a base for exploring optimization and timing. We then began the process of implementing new features over various iterations and comparing the pros and cons of each new version. The what, why, and how of each version are explained in much greater detail below.

This project appealed to all of us because it allowed us to build upon our knowledge gained in the final lab in Computer Architecture. That lab gave the assignment of creating a 32-bit MIPS compliant single-cycle CPU. We wanted to take that idea even further, challenging ourselves to create not only a pipelined CPU, but one with hazard avoidance. Beyond that we were fascinated by how CPUs have been optimized over time. We chose this final project, because of all the areas it allowed us to engage in: research of optimization, design of various systems, and finally synthesis with timing analysis.

V1 The Original CPU

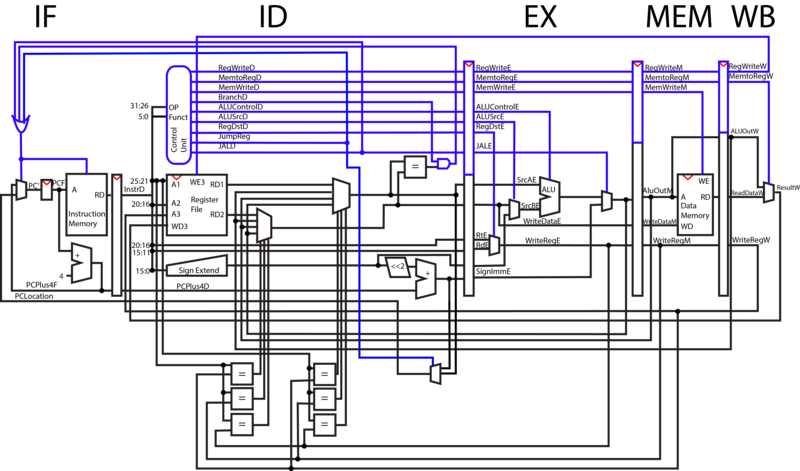

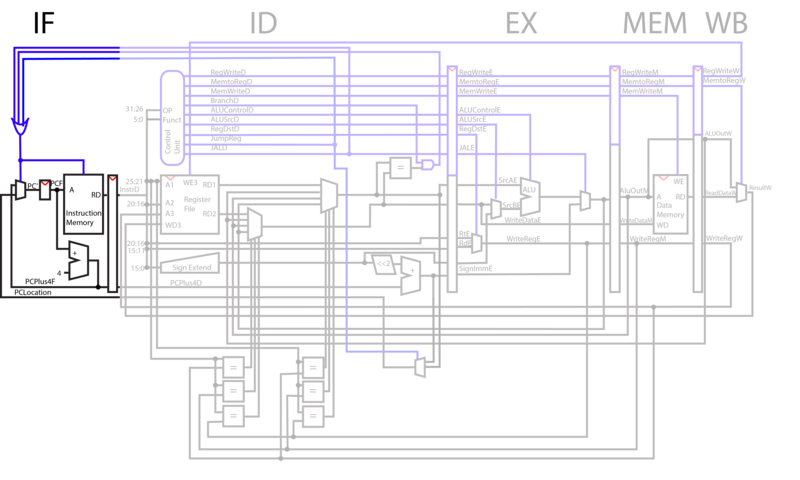

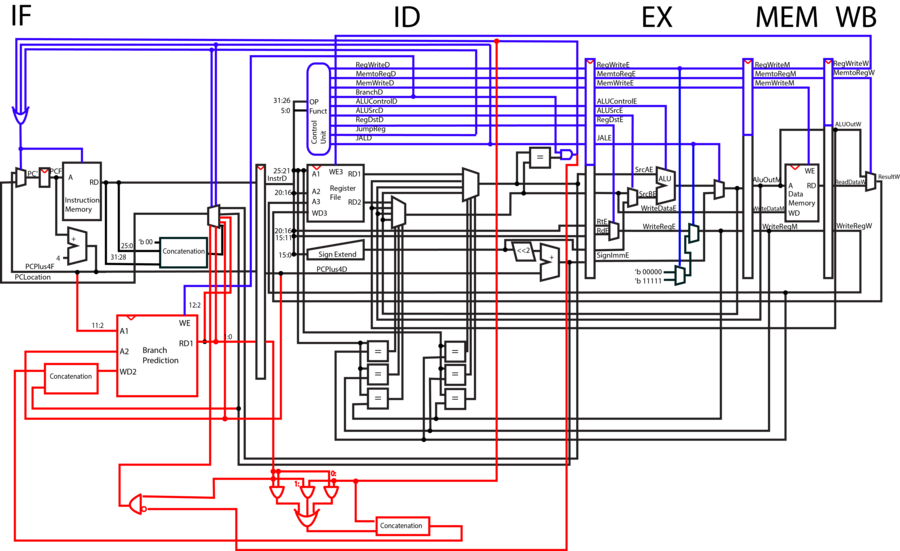

IF

During the instruction fetch phase, the output of the Program Counter is fed into instruction memory. Instruction memory will pull the instructions from the register that corresponds to the input PC value and output them to the next stage of the CPU. The value of PC is then incremented by 4, as the addresses of instruction registers differ by 4. The mux selects to either take PC+4, or an input register to jump to based on the selector bit. This selection is based on whether or not at least one of three different jump control lines is high.

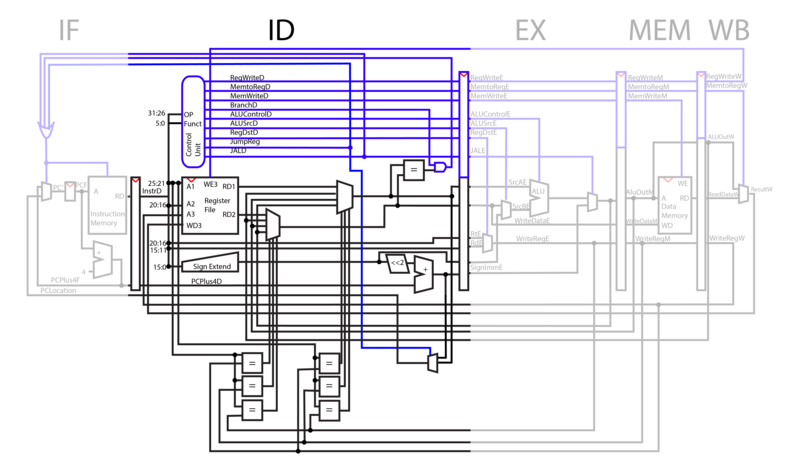

ID

During the instruction decode phase, parts of the opcode are sent to the control unit, which decodes the two inputs, determines the function that those inputs correspond to, and outputs ones or zeros through each control line depending on the function.

Two addresses are pulled from the opcode and fed into the register file, which pulls the corresponding registers from the addresses.

As part of our pipeline optimization, the six comparators at the bottom of the diagram check to see if later registers being requested in later phases of the CPU are the same as the ones being operated on. If the registers are the same, the ID phase outputs the updated data instead of the original, outdated information in that register that has not yet been written back to.

In a jump operation, the amount it should jump by is contained within 16 bits of the opcode. This immediate is fed through a sign extender, shifted by two, added to the current PC number and output to the next previous of the CPU. If this is a jump and link, Jal and RegWrite are put high. WriteReg allows us to write the PC to the register file. Jal selects the PC+4 to replace the ALUOut and to replace the WriteReg with register 31. If this is a normal Jump Jal is set high but RegWrite is not. Therefore the register will not be set despite Jal being on. Forwarding ignores register 0 in order to avoid forwarding of Jumps writing to register 31. In either case Jal forces PC to jump to the Branch location.

If WriteEnable is high, the number being fed into WD3 is written to the write register A3 from the writeback phase.

In order for us to avoid the number of No-Ops that incorrect branching would entail, we moved our BNE comparator into the ID phase. This way we only have to cancel a single instruction upon branching. Our method of branch prediction in this model is static. We always assume that we are not branching, until the comparator tells us otherwise.

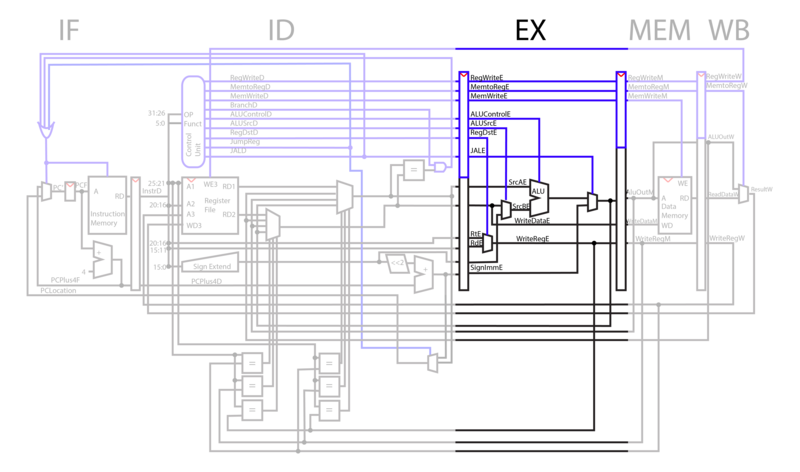

EX

In the execution phase, ALU operations are performed on the registers pulled from the instruction decode phase. The ALU operation selected depends on the ALUControl line. For add immediate, instead of adding RD2 to RD1, the CPU selects the immediate line from ID and adds it to RD1 using the ALUSrc selector bit.

The register that is to be written to is decided by the RegDst line. In operations like Add, where two registers are added and a third is written to, Rd is used. In operations like add immediate, where only one of the input registers to register file is used, Rt is used.

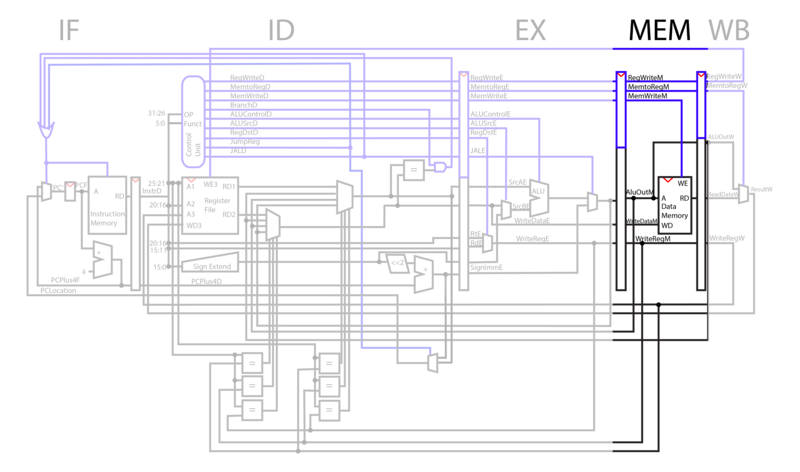

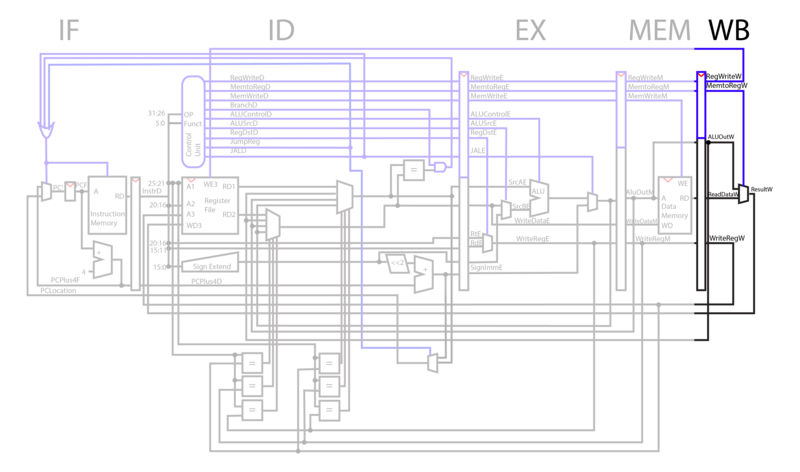

MEM

In the MEM phase, WriteData is written to the AluOut address if MemWrite is high. When MemWrite is low, data is read from WriteData register and output to RD.

WB

In the WriteBack phase of the CPU, the selector line MemtoReg selects whether to use the memory output or the ALU output. Result from WriteBack is fed into the register file in ID, and if WriteEnable is high, the data is written to WriteReg register from writeback.

Improvements

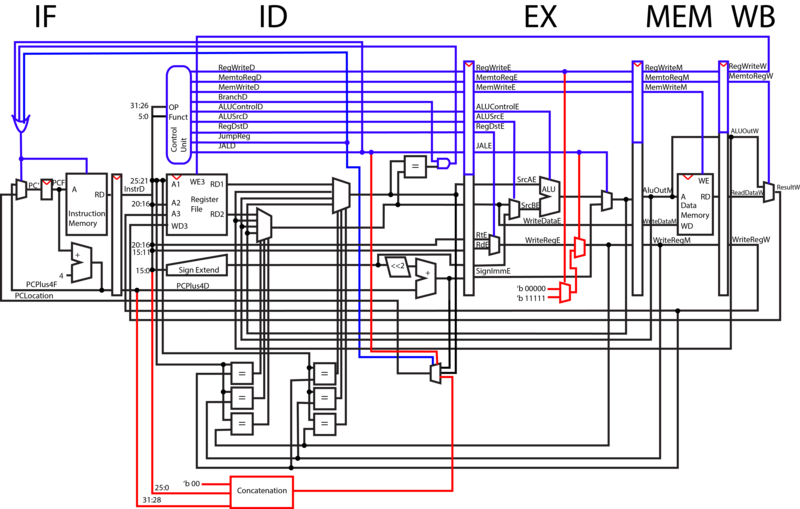

V2 MIPS Compliance

In our original CPU design, we treated branching in the same method as jumping, which was a different implementation from MIPS. To make our CPU fully MIPS compliant we had to add concatenation into the ID phase where we do our jumping. According to MIPS, branch commands are based on a delta where as jump commands require a target destination. The jump machine codes provides 26 bits which when concatenated with two 0's in the low order bits and the 4 highest order bits of the PC, provides the jump destination.

In order to use all 26 bits of immediate normal Jump operations must forcibly have their WriteReg set to 0 as to avoid errors in forwarding. This is why the RegWrite line, being the difference between Jump and Jal, controls whether Jal will force WriteReg to be 31 or 0.

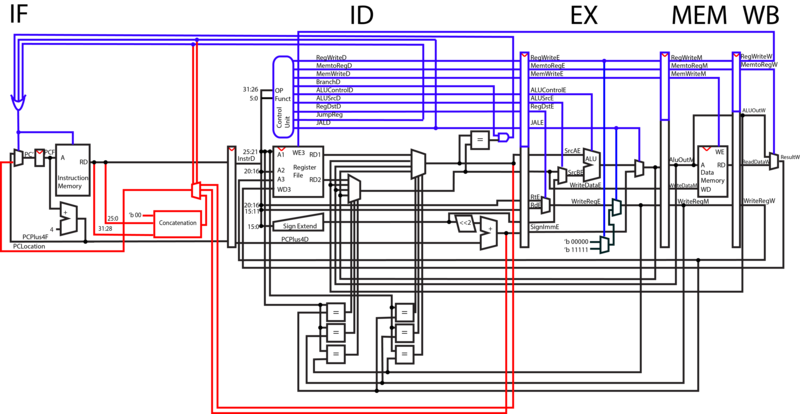

V3 Jump Up

Once our design was MIPS compliant, it allowed us to implement another feature. The concatenation made jumping much quicker and therefore we decided to move it up into the IF phase. The sooner jumping can happen the less clock cycles we waste.

V4 Dynamic Branch Prediction

Our original solution for hazards related to branching/jumping was static branch prediction. We always assumed that we were not branching. When we began our final project, we knew that our branch prediction was area in which we greatly wanted to add improvements. After much research we settled on dynamic 2-bit prediction.

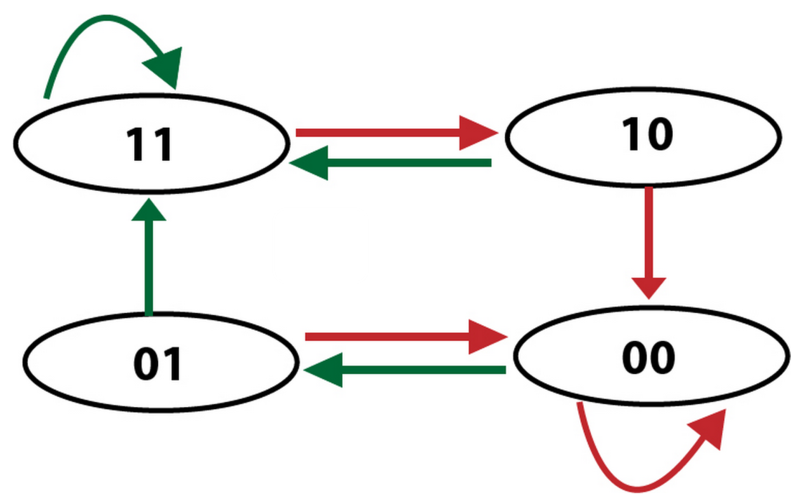

The idea behind dynamic prediction is that we are always changing our guess based on the accuracy of the previous guess. In the simplest case this is a one bit value. When a branch is taken the value is set to 1. When it is later not taken the value is set back to 0. A 2-bit predictor allows us slightly more accuracy. The diagram below explains how our 2-bit predictor works in the form of a finite state machine. The four states are strongly taken (11), weakly taken (10), weakly not taken (01), and strongly not taken (00). The green arrows represent when a branch was taken and the red arrows represent when a branch was not taken. Every time a branch is taken or not taken it follows the path dictated by those arrows and the state it is currently in.

Beyond the number of bits for prediction, there are still other details to be determined. For example, we could have implemented a global predictor. The global predictor merely stores one 2-bit value and uses this single value to determine action for all branches. We instead decided to create a cache and store a unique branch predictor value for each of the branches in the code. The figure below is a visualization of how this cache works. We index into the cache using low-order bits from the PC. In the rows of the cache we store not only the 2 bit predictor value, but also the branch destination. This way we do not have to recalculate the branch destination anytime after the first time the branch is taken.

To figure out how to implement this new way of branching, we relied on some of our oldest knowledge from Computer Architecture. First we generated the following truth table:

| State | Taken | New State |

|---|---|---|

| AB | C | DE |

| 00 | 0 | 00 |

| 00 | 1 | 01 |

| 01 | 0 | 00 |

| 01 | 1 | 11 |

| 10 | 0 | 00 |

| 10 | 1 | 11 |

| 11 | 0 | 10 |

| 11 | 1 | 11 |

Then we used Karnaugh maps for each bit of the new state to determine the simplest logic that could be implemented. Those maps are shown below, followed by the final implementation that was settled on added to the CPU in red.

| A B | A !B | !A !B | !A B | |

|---|---|---|---|---|

| C | 1 | 1 | 0 | 1 |

| !C | 1 | 0 | 0 | 0 |

D = AB+BC+AC

| A B | A !B | !A !B | !A B | |

|---|---|---|---|---|

| C | 1 | 1 | 1 | 1 |

| !C | 0 | 0 | 0 | 0 |

E = C

Reflection

The whole process of this project was incredibly gratifying and overall successful, but we did face a lot of difficulties along the way. One of our biggest hurdles was synthesizing our design. None of us had previous experience with synthesis using XILINX and it had a good bit of a learning curve for us to surmount. That is why we did not go as much into timing as we wish we could have. On the bright side, we spent a lot more time doing research, design, and implementation. This turned out to be the most interesting and beneficial part of the project so it is where we exerted most of our effort.

We also put a lot of emphasis on the documentation. From the very start it was most important to us that whatever work we got done was incredibly well documented to be helpful to future classes. In this regard, we feel we succeeded. We worked very hard to make our diagrams comprehensive and understandable. We put a lot of thought into how we would describe our implementations so they would be as intuitive as possible.

Looking back on our work plan, shown below, we were fairly accurate with our time predictions. The huge difference is that our final demo took a different form from our original plan. Although we had intended to do speed comparisons and show the timing differences, we earlier realized that we had bitten off more than we could chew. It consumed all of our time to simply focus on researching improvements, figuring out implementation, and then getting them all to a fully functional state in Verilog. We still had enough time to analyze the big picture of how our timings compared, although we did not do a lot of specific time testing through synthesis.

If we had more time on this project our TODO would surely be to fully test the timing of each phase of each individual version of our CPU through synthesis with XILINX. That being said, we are completely happy with the work we managed to do during our time working on this project. We are proud of our outcome and each had an incredibly valuable experience.

Goal: Optimize and document beautifully our pipelined CPU from MP3. Final Demo: A presentation of speed tests and comparisons of simple programs on our original CPU to the optimized CPU. Schedule: Study timing and balancing of CPU: Dec 11: 10 hours Implement new design features for speed optimization: Dec 17: 20 hours Documentation: Dec 19: 10 hours