Fibre Optic Video Transmission for Aircraft: Implementation

By Steven Cooreman

What did you do?

I made a blazing fast video signal converter in VHDL.

Traditionally, critical video signals in aircraft have been transmitted as analog signals over copper coaxial cable. However, as manufacturers look for new ways to reduce the take-off weight of aircraft, the focus has shifted to Plastic Optical Fiber (POF). This requires a new protocol to transmit video, because optical fiber can realistically only carry digital signals. The standards body that governs aircraft electronics has therefore drafted the ARINC-818 spec. It specifies the transmission of uncompressed video and requires a link speed of 1.0625 to 8.5 Gbit/s, depending on the resolution.

The company I am working with wants to introduce this new interface in their line of cockpit displays, and therefore needs an in-house implementation of both a receiver (to incorporate in the display) and a transmitter (to test that receiver). In the timeframe of this project, I worked on the transmitter, because it turned out to be the easier of the two to implement.

Why did you do it?

I had a couple of different options when I was looking for a thesis subject. I chose this one for a few reasons:

- I actually wanted to be an aerospace engineer first. This combines my passion for electronics with a link to the aircraft industry.

- I get to both design hardware (already happened over summer) and write logic (happening now) in the same project, which is an awesome way to taste and gain experience in the relevant subjects.

- I get to solve an actual engineering challenge for a project that already has a deadline because it has been put on the roadmap. It is admittedly freaking me out a bit, but it also introduces the reality of being an engineer: deadlines are often outside your control. Make it happen.

How did you do it?

I first set out to read the actual specification of the ARINC-818 protocol. This protocol is packet-based, which means video data is encapsulated in packets and then sent over the link. The protocol does not specify the actual timing and data packetization formats, but leaves that up to an accompanying document for each implementation: the Interface Control Document (ICD). As a reference, I used the Great River XGA ICD. It stipulates that video is sent line-synchronously, which means that video data arrives line by line and the line frequency is constant.

Between two video frames, there are a couple of blank lines that make it possible to synchronize to the video source. This is especially important because of the timing issues that arise when communicating across two different clocks: the speed at which the video comes in (dictated externally), and the fixed reference clock used for fiber transmission.

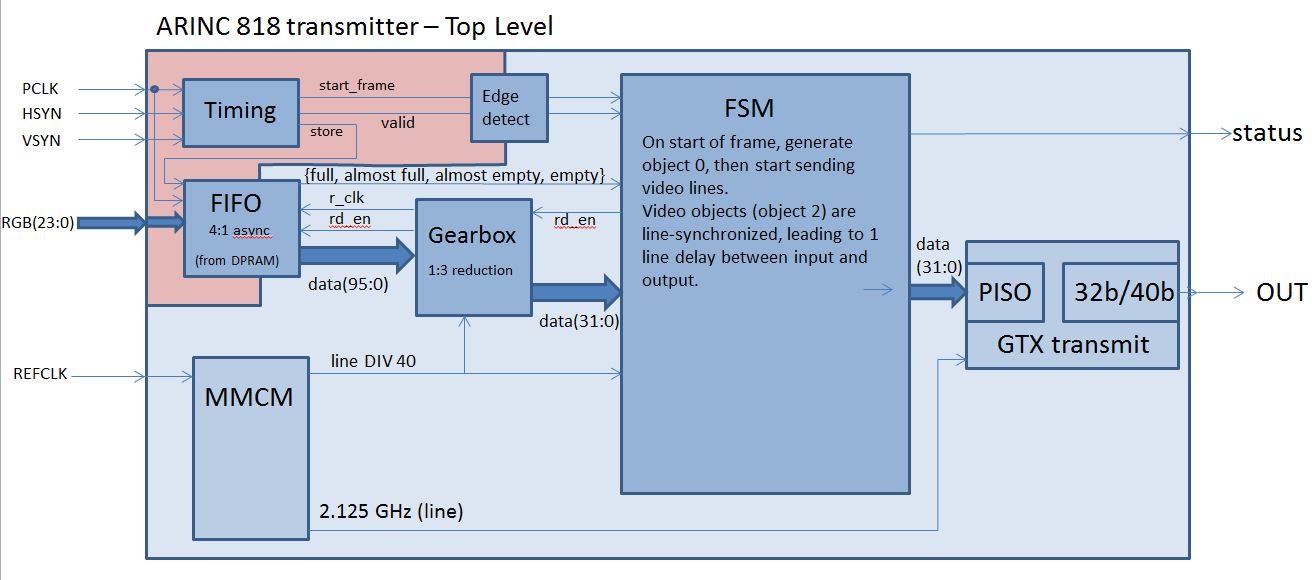

With this in mind, I started drawing a preliminary systems diagram. In order to successfully translate the video, I knew that I had to buffer it somehow, because the actual timing at which pixel data arrives (see XGA timing details w.r.t. blanking and sync pulses) varies. This was solved by adding a FIFO to buffer incoming pixels until they are ready to be sent out. The FIFO itself is implemented using on-chip Block RAM, and the implementation is taken care of by the Xilinx IPCore generator.

I did have the advantage that the analog video signal would be converted to a digital one outside of the FPGA, so I did not have to implement any ADC conversion.

Another part of the diagram was the timing checker. This module checks the timing of the incoming video by measuring the horizontal (line) and vertical (frame) periods in reference-clock cycles, and compares them against the supported resolutions. If the timing matches, it signals to the Finite State Machine that video is ready to be sent out. It also provides a pulse that indicates the start of a new frame, so that the packetizer can sync up to the incoming signal’s refresh rate. (Attachment: spec_timing.docx)
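The comparison the timing checker makes can be sketched in a few lines. This is a Python model rather than the VHDL module itself, and it rests on assumptions: the XGA totals (1344 x 806 at a 65 MHz pixel clock) are the standard VESA 60 Hz figures rather than values taken from the ICD, the periods are counted at the 106.25 MHz oscillator, and the 1% tolerance and all function names are made up for illustration.

```python
REF_CLK_HZ = 106.25e6      # reference oscillator frequency from the text

# Standard VESA XGA 60 Hz timing (assumed here, not taken from the ICD).
XGA_PIXEL_CLK_HZ = 65e6
XGA_H_TOTAL = 1344         # pixels per line, including blanking
XGA_V_TOTAL = 806          # lines per frame, including blanking


def expected_counts():
    """Reference-clock ticks per video line and per video frame for XGA."""
    line_s = XGA_H_TOTAL / XGA_PIXEL_CLK_HZ
    frame_s = line_s * XGA_V_TOTAL
    return round(line_s * REF_CLK_HZ), round(frame_s * REF_CLK_HZ)


def timing_valid(h_count, v_count, tolerance=0.01):
    """True if both measured periods are within `tolerance` of XGA.

    The 1% default is a hypothetical threshold, not a value from the spec.
    """
    exp_h, exp_v = expected_counts()
    return (abs(h_count - exp_h) <= tolerance * exp_h
            and abs(v_count - exp_v) <= tolerance * exp_v)


if __name__ == "__main__":
    print(expected_counts())            # ticks per line, ticks per frame
    print(timing_valid(2197, 1770720))  # an XGA-like source
    print(timing_valid(2800, 1770720))  # line period too long
```

In hardware this would be two counters and a pair of window comparators; the model is only meant to show which numbers the comparators would be loaded with.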

Then comes the clocking. The whole thing runs off a 106.25 MHz oscillator, because that is an integer fraction (1/20) of the intended 2.125 Gbit/s line rate, so the transmit clock can be generated with a phase-locked loop. It also lets the packetizer run at exactly the right frequency for the transmission: the transmitter needs 32 data bits for every 40 bits sent, so the data has to be clocked in 32 bits at a time at 2.125 GHz / 40 = 53.125 MHz, which is exactly half of the reference clock.
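The clock relationships can be checked with exact arithmetic. The snippet below is only a worked calculation using the figures quoted above; the variable names are mine.

```python
from fractions import Fraction

LINE_RATE = Fraction(2_125_000_000)   # serial bit rate on the fiber, bits/s
REF_CLK = Fraction(106_250_000)       # on-board oscillator, Hz

pll_multiplier = LINE_RATE / REF_CLK  # factor the PLL has to multiply by
word_clock = LINE_RATE / 40           # one 40-bit encoded word per tick
payload_rate = word_clock * 32        # data bits per second before encoding

if __name__ == "__main__":
    print("PLL multiplier:", pll_multiplier)
    print("word clock (MHz):", float(word_clock) / 1e6)
    print("word clock / reference clock:", word_clock / REF_CLK)
    print("payload rate (Gbit/s):", float(payload_rate) / 1e9)
```

Using `Fraction` avoids any floating-point doubt about whether the ratios really are integers.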

These 32 bits per 40 sent are a direct result of the encoding applied at the link level: 8b/10b encoding, four times in parallel. This type of encoding provides a couple of benefits:

- Near-constant running disparity: at every symbol boundary, the running difference between the number of 1’s and 0’s sent over the link is never off by more than one. This is very important for conductor-based transmission, because it keeps the average (reference) voltage on the link constant. The encoding also limits how many identical bits can follow each other, which guarantees enough transitions for the receiver to recover the clock from the link, something that is just as important with fiber optics.

- Basic error detection: only a subset of the 1024 possible 10-bit codes is valid, and each valid code also has to fit the current running disparity. A receiver that sees an unused code or a disparity violation knows that a bit error occurred somewhere on the link. It is a rudimentary check, but it comes for free.

- Control codes: because 10 bits offer far more combinations than the 256 data values need, some of the spare codes are reserved as ‘special’ (control) characters that can never be mistaken for data. This is very useful for delimiting payload data that can take on any of the 256 values: you can still send control signals without first announcing how many data bytes will follow and having the receiving system count them out, which is very error-prone.
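The disparity rule from the list above can be illustrated with a small Python sketch. It is not an 8b/10b encoder: it only tracks running disparity over 10-bit codes that are already encoded. The three code words are the standard K28.5 (both disparity variants) and D21.5 patterns written in transmission order; the function names are hypothetical.

```python
K28_5_RD_NEG = "0011111010"   # K28.5 as sent when running disparity is -1
K28_5_RD_POS = "1100000101"   # K28.5 as sent when running disparity is +1
D21_5 = "1010101010"          # balanced data code, identical for both cases


def symbol_disparity(code):
    """Number of ones minus number of zeros in one 10-bit code."""
    if len(code) != 10 or set(code) - {"0", "1"}:
        raise ValueError("expected a string of ten 0/1 characters")
    return code.count("1") - code.count("0")


def next_running_disparity(rd, code):
    """Running disparity (+1 or -1) after sending `code`.

    Raises ValueError when the code would push the disparity out of
    bounds, which is what a receiver flags as a disparity error.
    """
    d = symbol_disparity(code)
    if d == 0:
        return rd
    if d == 2 and rd == -1:
        return 1
    if d == -2 and rd == 1:
        return -1
    raise ValueError("disparity violation")


def longest_run(bits):
    """Length of the longest run of identical bits in a bit string."""
    best = run = 1
    for prev, cur in zip(bits, bits[1:]):
        run = run + 1 if cur == prev else 1
        best = max(best, run)
    return best


if __name__ == "__main__":
    rd = -1
    for code in (K28_5_RD_NEG, D21_5, K28_5_RD_POS):
        rd = next_running_disparity(rd, code)
        print(code, "-> running disparity", rd)
    print("longest run:", longest_run(K28_5_RD_NEG + K28_5_RD_POS))
```

Sending the wrong variant of a code, for example `K28_5_RD_NEG` while the running disparity is already +1, raises the error a receiver would report.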

Lastly, the state machine takes care of the generation of the packets. On reset, it will wait for the ‘valid’ signal to become true, indicating that valid video is being received. When valid video is present, it will wait until the start of a frame, reset (clear) the FIFO, wait a specified amount of time, and then transmit an “Object 0”. In ARINC818 terms, this is the start-of-frame object, which contains a couple of parameters relevant to the link status, video properties, number of the incoming frame, etc.
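The start-up sequence described above can be modelled as a small state machine. This is a behavioural Python sketch, not the VHDL source: the state names, the length of the delay, and the choice to fall back to waiting whenever `valid` drops are all assumptions made for illustration.

```python
from enum import Enum, auto


class State(Enum):
    WAIT_VALID = auto()   # no valid video yet
    WAIT_FRAME = auto()   # valid video, waiting for start of frame
    CLEAR_FIFO = auto()   # reset the pixel FIFO
    DELAY = auto()        # fixed wait before the first object
    SEND_OBJ0 = auto()    # transmit the start-of-frame object
    VIDEO = auto()        # Object 2 phase, not modelled here


DELAY_TICKS = 4           # hypothetical; the real value follows from the ICD


def step(state, valid, start_frame, delay_left):
    """Advance one clock tick; returns (next_state, next_delay_left)."""
    if not valid:                        # assumption: losing video restarts
        return State.WAIT_VALID, 0
    if state is State.WAIT_VALID:
        return State.WAIT_FRAME, 0
    if state is State.WAIT_FRAME:
        return (State.CLEAR_FIFO, 0) if start_frame else (state, 0)
    if state is State.CLEAR_FIFO:
        return State.DELAY, DELAY_TICKS
    if state is State.DELAY:
        if delay_left <= 1:
            return State.SEND_OBJ0, 0
        return state, delay_left - 1
    if state is State.SEND_OBJ0:
        return State.VIDEO, 0
    return state, 0


def run(inputs):
    """Feed (valid, start_frame) pairs through the FSM; return the states."""
    state, left = State.WAIT_VALID, 0
    trace = []
    for valid, start_frame in inputs:
        state, left = step(state, valid, start_frame, left)
        trace.append(state)
    return trace


if __name__ == "__main__":
    stimulus = [(False, False), (True, False), (True, False), (True, True)]
    stimulus += [(True, False)] * 7
    for tick, state in enumerate(run(stimulus)):
        print(tick, state.name)
```

Keeping the transition function free of side effects makes it easy to compare against a waveform tick by tick.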

After that, it will wait for a predetermined number of line intervals (because the spec is line-synchronous), and start issuing “Object 2” objects. The ICD determines that each Object 2 carries the pixel data for half a video line, in RGB format with 8 bits per color. This comes down to 24 bits per pixel. Since XGA is 1024 pixels wide, this amounts to 512*3 = 1536 bytes of pixel data (not including object header and CRC) per packet. XGA is also 768 lines high, so 768*2 = 1536 Object 2 packets have to be sent for one frame of video. When all of these packets have been sent, the FSM will wait for the next start-of-frame signal from the timing checker. It will do so on a line-synchronous basis, meaning that it will only start sending video at the start of a new blanking line. The concept of a blanking line is a relic from analog video, where you needed time to move the cathode ray back to its home position. We still use the term because nothing better is available. In Fibre Channel, a blanking line is just a run of consecutive “IDLE” characters for the time it would normally take to send a line of video.
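The packet arithmetic above, plus a rough check that the pixel data fits in the link, can be written out as follows. The 60 Hz refresh rate is an assumption (it matches the standard XGA mode); the 2.125 Gbit/s line rate and the 8b/10b overhead come from the clocking discussion, and object headers, CRC and idle characters are ignored.

```python
WIDTH, HEIGHT = 1024, 768      # XGA active area
BYTES_PER_PIXEL = 3            # RGB, 8 bits per color
PACKETS_PER_LINE = 2           # each Object 2 carries half a line
FRAME_RATE_HZ = 60             # assumed refresh rate
LINE_RATE_BPS = 2_125_000_000  # serial line rate

payload_bytes_per_packet = WIDTH // PACKETS_PER_LINE * BYTES_PER_PIXEL
packets_per_frame = HEIGHT * PACKETS_PER_LINE
pixel_bits_per_second = WIDTH * HEIGHT * BYTES_PER_PIXEL * 8 * FRAME_RATE_HZ
link_data_bits_per_second = LINE_RATE_BPS * 8 // 10   # after 8b/10b

if __name__ == "__main__":
    print("pixel bytes per Object 2:", payload_bytes_per_packet)
    print("Object 2 packets per frame:", packets_per_frame)
    print("pixel data rate (Gbit/s):", pixel_bits_per_second / 1e9)
    utilisation = pixel_bits_per_second / link_data_bits_per_second
    print("share of link data capacity: {:.1%}".format(utilisation))
```

Under these assumptions the raw pixel data takes up roughly two thirds of the usable capacity, which leaves room for headers and blanking.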

How can others build on it?

This is still a work in progress that I plan to keep working on, but anyone who wants to build on it would need the following:

- A copy of the ARINC-818 spec, available from the organization for a fee. An implementer’s guide is available upon request at http://www.arinc818.com/arinc-818-library.html

- A copy of the implementation-specific ICD (example enclosed: advb_ref_icd_xga_24rgb_60h_2g_p_lsync.pdf)

- The necessary hardware (I designed a board with a Xilinx Kintex-7 FPGA specifically for this)

- Xilinx ISE tools with IPCores, and simulation libraries for the FPGA. I used Xilinx ISE version 13.1.

- Modelsim simulation tools. I used version 10.0 PE.

Because of confidentiality, the code is not provided for download on the wiki.

Implementation schematic

Build instructions

- Generate simulation libraries for Kintex-7 with the Xilinx-provided command-line tool ‘compxlib’. Look for the GUI version in your ISE installation folder.

- Import the generated libraries in Modelsim

- Import the FIFO files from the ipcore directory of the ISE project.

- Import the custom VHD files.

- Simulate tb_ARINC818

TODO list for the transmitter

- Implement the actual CRC calculation. Constant ‘C0FFEE11’ just doesn’t cut it.

- Investigate a better timing simulation model. Currently, Modelsim only accepts integer nanosecond periods, and my clocks are 65 MHz (incoming) and 53.125 MHz (reference). Neither period is a whole number of nanoseconds, so the periods get rounded and my simulation is not accurate.

- Calculate the necessary FIFO depth. This goes along with the point above. When I set the clocks to the worst case (slowing down the incoming video and speeding up the outgoing side to the next integer period), my 4 KB FIFO underflows. But then again, the simulated clocks are then outside the specs for both sides.

- Implement running disparity checking. The only valid IDLE character assumes a negative running disparity, so the EOF characters need to take the running disparity negative. That is why there are two of them defined: a neutral and an inverting one. Currently, this is not implemented, and it can break the protocol.

- Create an actual test bench, where the generated output is checked based on timing and content, rather than verifying everything manually with a waveform.

- Add the hardware transmitter to the project.
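To put numbers on the clock-rounding item in the list above, the snippet below computes what each clock becomes when its period is forced to a whole number of nanoseconds. It is plain arithmetic in Python; the function name and the choice of rounding modes are mine.

```python
import math


def rounded_clock(freq_hz, mode=round):
    """Return (ideal period ns, simulated period ns, simulated freq Hz)."""
    period_ns = 1e9 / freq_hz
    sim_period_ns = float(mode(period_ns))
    return period_ns, sim_period_ns, 1e9 / sim_period_ns


if __name__ == "__main__":
    cases = (
        ("incoming, nearest", 65e6, round),
        ("reference, nearest", 53.125e6, round),
        ("incoming, slowed down", 65e6, math.ceil),
        ("reference, sped up", 53.125e6, math.floor),
    )
    for name, freq, mode in cases:
        ideal, sim, sim_freq = rounded_clock(freq, mode)
        error = (sim_freq - freq) / freq
        print("{}: {:.4f} ns -> {:.0f} ns, {:.3f} MHz ({:+.2%})".format(
            name, ideal, sim, sim_freq / 1e6, error))
```

The last two cases correspond to the worst-case FIFO experiment: the write side slows to 62.5 MHz while the read side speeds up to about 55.6 MHz, both several percent away from the real clocks.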

Pitfalls and gotchas

- Originally, I planned to write 24 bits to the FIFO on the analog side, and read 32 bits with the FSM. Turns out this is not possible, so I had to go with writing 24 bits and reading 96, and putting a ‘gearbox’ between the FIFO and the FSM. Everything MUST run on the same clock (because of timing and skew), so it works by setting the FIFO’s read enable pin once every three reads from the FSM.

- Because video data can arrive any time after the start-of-frame, we want the first 32 bits of valid video data available immediately. This is why the FIFO implements First-Word Fall-through, instead of requiring a read-enable pulse for the first word.

- To cut down on latency, the gearbox implements a mux to slice up the 96 bits into three times 32, instead of a data-holding element that is updated on every read. This also goes along with the point above.

- The start_frame pulse of the timing checker is generated with the incoming clock, but the FSM runs on the reference clock. That is why it is buffered with a single-shot resettable edge trigger. It is set by the pulse, and reset by the FSM once it has acted upon the signal.

- Modularization is key!
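The gearbox from the first and third points can be modelled in a few lines. This is a behavioural Python sketch, not the VHDL: it assumes the first pixel written ends up in the most significant bits of the 96-bit word, and that the read enable is raised together with the third slice so that the next word falls through. Both are illustrative choices.

```python
def pack_pixels(pixels):
    """Concatenate four 24-bit pixels into one 96-bit FIFO word.

    Assumed ordering: the first pixel lands in the top 24 bits.
    """
    if len(pixels) != 4:
        raise ValueError("a 96-bit word holds exactly four 24-bit pixels")
    word = 0
    for pixel in pixels:
        word = (word << 24) | (pixel & 0xFFFFFF)
    return word


def gearbox_read(word96, sel):
    """Mux one 32-bit slice out of the 96-bit word.

    Returns (slice, fifo_read_enable); the read enable is only true on
    the last of the three slices, i.e. once every three FSM reads.
    """
    if sel not in (0, 1, 2):
        raise ValueError("sel must be 0, 1 or 2")
    slice32 = (word96 >> (32 * (2 - sel))) & 0xFFFFFFFF
    return slice32, sel == 2


if __name__ == "__main__":
    word = pack_pixels([0x112233, 0x445566, 0x778899, 0xAABBCC])
    for sel in range(3):
        data, rd_en = gearbox_read(word, sel)
        print("sel={} data={:08X} rd_en={}".format(sel, data, rd_en))
```

Because the slice is selected by a pure mux, no extra register sits between the FIFO output and the FSM, which is the latency argument made above.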

Workplan reflection

I originally planned to work on this throughout the semester, with the intention of having a finalized transmitter by Christmas. But, being an exchange student, I didn't fully realize how heavy the workload of four Olin classes would be. This caused my work on this to get pushed back. I was very glad to have gotten the opportunity to work on this as a CompArch final project, so that I don't have to go home empty-handed.

As far as the work for the final project goes, I'm actually quite pleased with my progress. There are certain bits lacking, but generally speaking, I have functional modules and a good system-level design, so that there is only fine-tuning left (and the implementation of CRC, but I will have to see if a core for that is not already available internally).

In general, I'd say mission accomplished :)